Large language models (LLMs) have been making waves in the world of artificial intelligence. They have evolved far beyond mere text generation and now show impressive capabilities across a wide array of tasks. However, their potential is far from fully tapped.

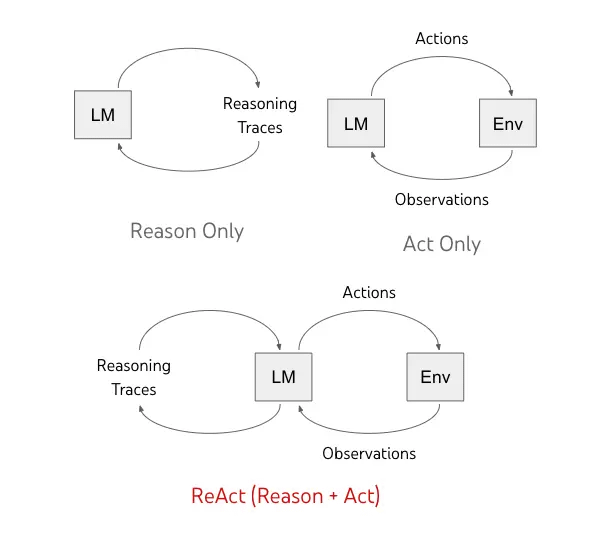

Today, we embark on a journey to explore a game-changing approach that combines reasoning and acting in language models, aptly named “ReAct,” short for Reasoning + Acting.

In this post, we’re going to take a simple, easy-to-follow journey into “ReAct: Synergizing Reasoning and Acting in Language Models.” We’ll break it down step by step, so you’ll understand what ReAct is, how it operates, and how you can use it with OpenAI’s GPT models and the widely used LangChain framework. Get ready for a clear, straightforward exploration of an exciting paradigm that is changing how language models handle reasoning and decision-making tasks!

Breaking the Chains: The Pitfalls of Conventional Prompting

Large language models (LLMs) have undoubtedly revolutionized natural language processing, but they come with their own set of challenges. Perhaps the most significant is getting these models to perform specific tasks or generate the desired outputs accurately. While prompting methods like chain-of-thought have proven effective to some extent, they often fall short when it comes to fine-grained control and precise reasoning.

On its own, an LLM does not always give correct answers to questions that depend on external data sources, whether on the internet or an intranet. This is where ReAct comes into play, representing a significant advancement in the field.

Several research papers have introduced flexible architectures that combine a language model’s reasoning abilities with extra expert components. Two well-known examples are ReAct (which stands for Reasoning + Acting) and MRKL (Modular Reasoning, Knowledge, and Language).

There is a growing body of research that employs language models to interact with external environments, such as text-based games, web navigation, and robotics. These prompting techniques excel at mapping textual contexts to text actions but often struggle with abstract reasoning and maintaining a working memory for long-term decision-making.

Introducing ReAct: A New Paradigm

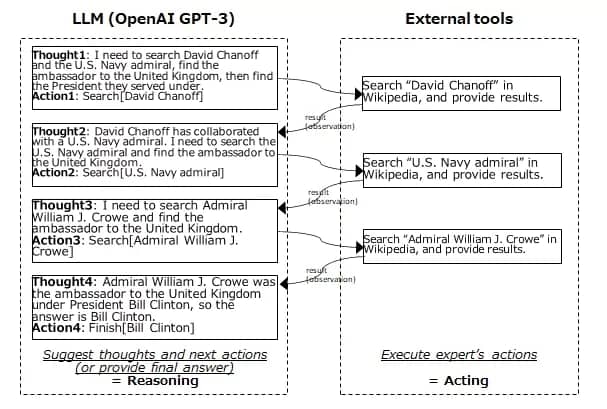

In 2022, Shunyu Yao and colleagues (Yao et al., 2022) introduced a game-changing framework known as ReAct. What makes it stand out? It’s all about how it orchestrates the interplay between large language models (LLMs), reasoning traces, and task-specific actions.

ReAct, short for “Reasoning + Acting,” represents a breakthrough in addressing the challenges of guiding LLMs. At its core, ReAct seeks to bridge the gap between abstract reasoning and real-world decision-making, giving LLMs the ability to actively seek out, understand, and use external information.

ReAct introduces a unique approach that combines verbal reasoning traces and text actions in an interleaved manner. While text actions involve interaction with the external environment, reasoning traces are internally focused, enhancing the model’s own understanding without affecting the external world. This integration allows language models to perform dynamic reasoning, create high-level plans for acting, and interact with external resources for additional information.

For tasks where reasoning is of primary importance, ReAct alternates the generation of reasoning traces and actions so that the task-solving trajectory consists of multiple reasoning-action-observation steps. For decision-making tasks that potentially involve a large number of actions, reasoning traces only need to appear sparsely in the most relevant positions of a trajectory.

The synergy between reasoning and acting allows the model to perform dynamic reasoning to create, maintain, and adjust high-level plans for acting (reason to act), while also interacting with the external environments to incorporate additional information into reasoning (act to reason).

ReAct is a general paradigm that combines advances in reasoning and acting to enable language models to solve various language reasoning and decision-making tasks. According to the authors, it systematically outperforms reasoning-only and acting-only paradigms, both when prompting larger language models and when fine-tuning smaller ones. The integration of reasoning and acting also produces human-aligned task-solving trajectories that improve interpretability, diagnosability, and controllability.

How Does ReAct Work?

ReAct enables language models to generate both verbal reasoning traces and text actions in an interleaved manner. While actions lead to observation feedback from an external environment, reasoning traces do not affect the external environment.

Instead, they affect the internal state of the model by reasoning over the context and updating it with useful information to support future reasoning and acting.
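To make the thought/action/observation cycle concrete, here is a minimal sketch of a ReAct loop in Python. It assumes a hypothetical `llm` callable that returns a thought followed by a line `Action: Tool[argument]`, and a hypothetical in-memory `Search` tool; the scripted replies stand in for a real model, so this shows the control flow only, not how any particular library implements it.

```python
import re
from typing import Callable, Dict

# Matches actions such as "Search[High Plains]" or "Finish[1,800 to 7,000 ft]".
ACTION_RE = re.compile(r"^(\w+)\[(.*)\]$")


def react_loop(
    llm: Callable[[str], str],
    tools: Dict[str, Callable[[str], str]],
    question: str,
    max_steps: int = 5,
) -> str:
    """Interleave thoughts, actions and observations until the model calls Finish[...]."""
    context = f"Question: {question}\n"
    for step in range(1, max_steps + 1):
        reply = llm(context)
        thought, _, action = reply.partition("\nAction: ")
        # The thought is only appended to the context; it never touches the environment.
        context += f"Thought {step}: {thought}\nAction {step}: {action}\n"
        match = ACTION_RE.match(action.strip())
        if match is None:
            observation = f"Invalid action: {action!r}"
        else:
            name, arg = match.groups()
            if name == "Finish":
                return arg
            tool = tools.get(name)
            observation = tool(arg) if tool else f"Unknown tool: {name}"
        # Only actions produce observations, which feed the next reasoning step.
        context += f"Observation {step}: {observation}\n"
    return "No answer within the step budget"


# Hypothetical stand-ins for a real LLM and a real search API.
pages = {
    "Colorado orogeny": "The eastern sector extends into the High Plains.",
    "High Plains (United States)": "The High Plains rise in elevation from around 1,800 to 7,000 ft.",
}
scripted_replies = iter([
    "I need to search Colorado orogeny.\nAction: Search[Colorado orogeny]",
    "The eastern sector extends into the High Plains. I need its elevation.\nAction: Search[High Plains (United States)]",
    "The High Plains rise from around 1,800 to 7,000 ft.\nAction: Finish[1,800 to 7,000 ft]",
])
answer = react_loop(
    llm=lambda context: next(scripted_replies),
    tools={"Search": lambda term: pages.get(term, f"Could not find [{term}].")},
    question="What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?",
)
print(answer)  # 1,800 to 7,000 ft
```

Here every action is preceded by a thought, as in reasoning-heavy tasks; for long decision-making tasks, the same loop could let the model skip thoughts on most steps.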

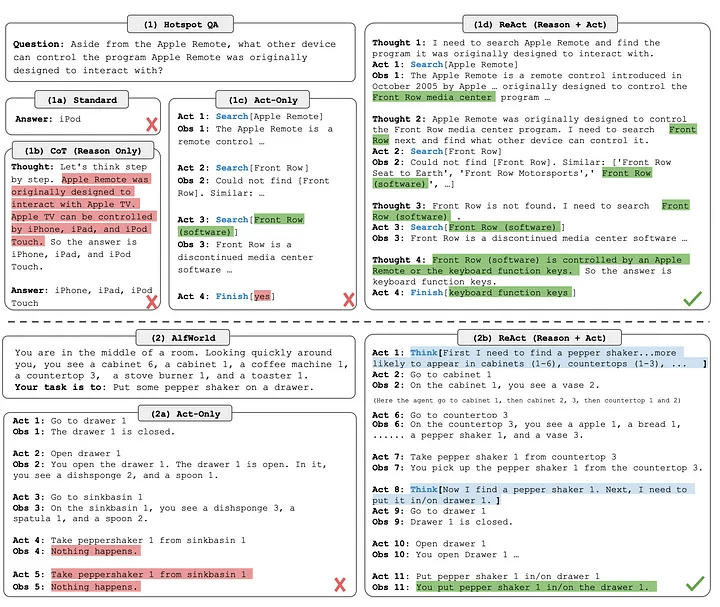

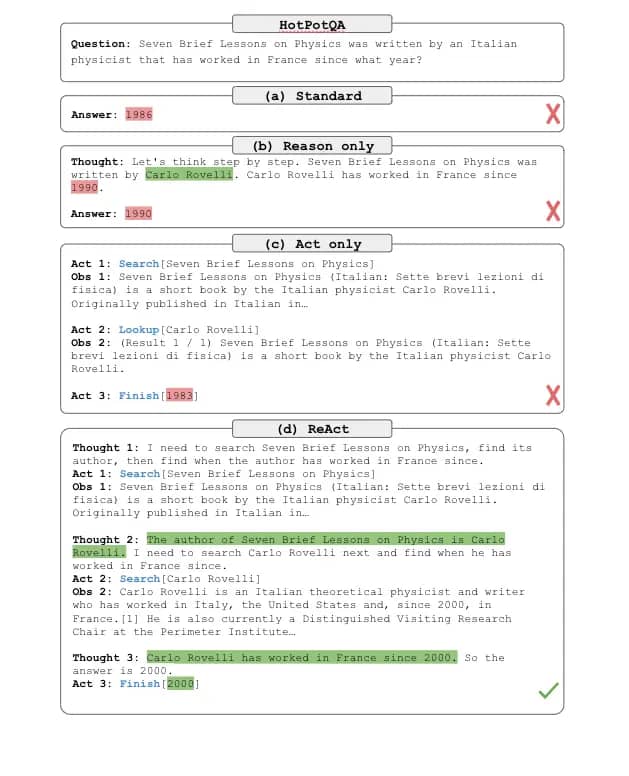

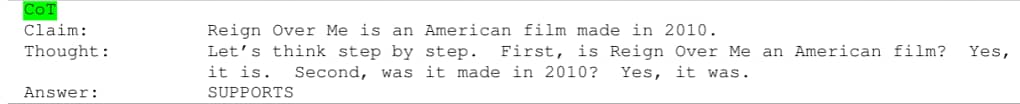

The figures above compare four prompting methods: (a) Standard, (b) Chain-of-thought (CoT, reason only), (c) Act-only, and (d) ReAct (Reason + Act).

Reasoning Traces: Unleashing Potential

ReAct enables LLMs not only to reason but also to lay out a roadmap of their thoughts. These reasoning traces aren’t just decoration: they help the model plan, track its progress, and adapt its actions, and they even come in handy for handling exceptions and unexpected curveballs.

Taking Action: The External Connection

But ReAct doesn’t stop at thinking; it encourages action! LLMs can now venture into the outside world, connecting with knowledge bases and other sources. It’s like giving them a ticket to the grand information carnival.

Reliability Boost: The Data Gathering

The ReAct framework transforms LLMs into information hunters. They interact with external tools to gather additional data, ensuring their responses are more reliable and grounded in facts.

Impressive Results: Outshining the Rest

Here’s the kicker: ReAct isn’t just talk. It walks the walk! It outperforms strong baselines on several language and decision-making benchmarks. But it doesn’t stop there.

Trustworthiness: Building Confidence

ReAct isn’t just about getting the job done; it’s about doing it right. It boosts the human interpretability and trustworthiness of LLMs. It’s like turning complex AI into your trusty sidekick.

The Winning Combo: ReAct + Chain-of-Thought

What’s the secret sauce? Combine ReAct with the Chain-of-Thought (CoT) method. This dynamic duo taps into both internal knowledge and external information, creating a powerhouse approach that leaves the competition in the dust.

In a world where AI reigns supreme, ReAct is the ace up our sleeves, ushering in a new era of smarter, more reliable language models.

Understanding Reasoning in LLMs

To grasp the significance of ReAct, it’s essential to understand the concept of reasoning within LLMs. Previously, reasoning was often seen as a post-answer process. When prompted with a question, an LLM would generate an answer and then provide justifications for that answer. This approach had limitations, as it often led to biased or incomplete responses.

ReAct Prompting: Customizing Your Prompts for Maximum Impact

One crucial aspect of using ReAct effectively is customizing your prompts. While the examples from the ReAct paper are valuable, tailoring your prompts to your specific task or domain can significantly enhance your model’s performance. Whether you’re working in finance, healthcare, or any other field, adapting the prompts is key.

Here is an example of what the ReAct prompt exemplars look like (obtained from the paper and shortened to one example for simplicity):

```
Question What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?
Thought 1 I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of the area.
Action 1 Search[Colorado orogeny]
Observation 1 The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas.
Thought 2 It does not mention the eastern sector. So I need to look up eastern sector.
Action 2 Lookup[eastern sector]
Observation 2 (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny.
Thought 3 The eastern sector of Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range.
Action 3 Search[High Plains]
Observation 3 High Plains refers to one of two distinct land regions
Thought 4 I need to instead search High Plains (United States).
Action 4 Search[High Plains (United States)]
Observation 4 The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3]
Thought 5 High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft.
Action 5 Finish[1,800 to 7,000 ft]
...
```

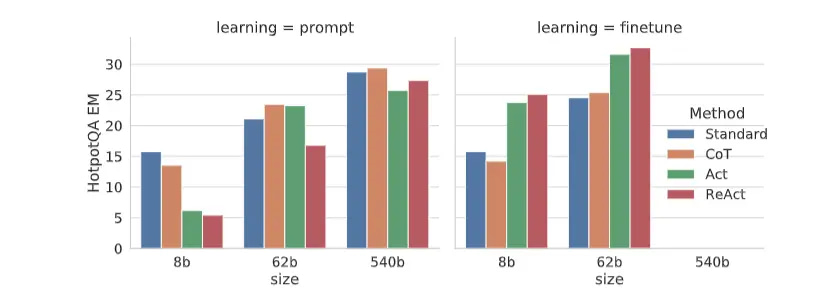

ReAct Fine-tuning

To make smaller language models perform better, the authors use a clever strategy: they prompt the large PaLM-540B model with ReAct to generate task-solving trajectories.

These trajectories act like worked examples. The authors keep the trajectories that reached correct answers and use them to fine-tune smaller language models, PaLM-8B and PaLM-62B.

In simple terms, ReAct helps the smaller models get smarter by showing them the best tricks from the big models.
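The filtering step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the `Trajectory` record, the case-insensitive answer comparison, and the prompt/completion output format are hypothetical choices, not the exact data pipeline used in the paper.

```python
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Trajectory:
    question: str
    steps: str      # full Thought/Action/Observation text generated by the large model
    predicted: str  # answer inside Finish[...]
    gold: str       # reference answer from the dataset


def build_finetuning_set(trajectories: List[Trajectory]) -> List[Dict[str, str]]:
    """Keep only trajectories whose final answer is correct and turn them into training pairs."""
    examples = []
    for t in trajectories:
        if t.predicted.strip().lower() == t.gold.strip().lower():
            # The small model learns to reproduce the whole trajectory, not just the answer.
            examples.append({"prompt": f"Question: {t.question}\n", "completion": t.steps})
    return examples


trajectories = [
    Trajectory("Which magazine was started first?",
               "Thought 1: ...\nAction 1: Finish[Arthur's Magazine]",
               "Arthur's Magazine", "Arthur's Magazine"),
    Trajectory("Who was Milhouse named after?",
               "Thought 1: ...\nAction 1: Finish[Bart Simpson]",
               "Bart Simpson", "Richard Nixon"),
]
for example in build_finetuning_set(trajectories):
    print(json.dumps(example))  # one JSON line per kept trajectory
```

Only the first trajectory survives the filter, so the smaller model is never trained on reasoning that led to a wrong answer.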

ReAct in Action: Evaluations

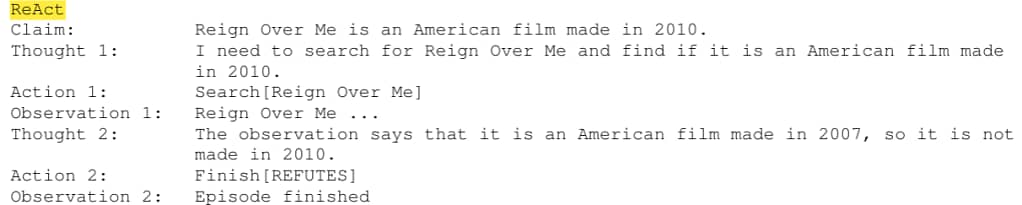

ReAct was evaluated across four different benchmarks: question answering (HotpotQA), fact verification (FEVER), a text-based game (ALFWorld), and web page navigation (WebShop).

For HotpotQA and FEVER, where the model could interact with a Wikipedia API, ReAct outperformed vanilla action generation models while being competitive with chain-of-thought (CoT) reasoning.

The approach with the best results was a combination of ReAct and CoT that uses both internal knowledge and externally obtained information during reasoning.

| Framework | HotpotQA (exact match, 6-shot) | FEVER (accuracy, 3-shot) |
|---|---|---|
| Standard | 28.7 | 57.1 |
| Reason-only (CoT) | 29.4 | 56.3 |
| Act-only | 25.7 | 58.9 |
| ReAct | 27.4 | 60.9 |
| Best ReAct + CoT Method | 35.1 | 64.6 |
| Supervised SoTA | 67.5 (using ~140k samples) | 89.5 (using ~90k samples) |
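HotpotQA is scored by exact match (EM), which compares answers only after normalizing them. Below is a minimal sketch of the SQuAD-style normalization that HotpotQA evaluation is commonly based on (lowercasing, dropping punctuation and the articles a/an/the, collapsing whitespace); treat it as an approximation rather than the official evaluation script.

```python
import re
import string
from typing import List


def normalize_answer(text: str) -> str:
    """Lowercase, remove punctuation and articles, and collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold: str) -> bool:
    return normalize_answer(prediction) == normalize_answer(gold)


def em_score(predictions: List[str], golds: List[str]) -> float:
    """Percentage of predictions that match their reference answer after normalization."""
    hits = sum(exact_match(p, g) for p, g in zip(predictions, golds))
    return 100.0 * hits / len(golds)


print(exact_match("The Saimaa Gesture", "Saimaa Gesture"))               # True
print(em_score(["Richard Nixon.", "Bart"], ["Richard Nixon", "Nixon"]))  # 50.0
```

Because matching is all-or-nothing, a partially correct answer such as "Nixon" for "Richard Nixon" scores zero, which is one reason the EM numbers in the table look low.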

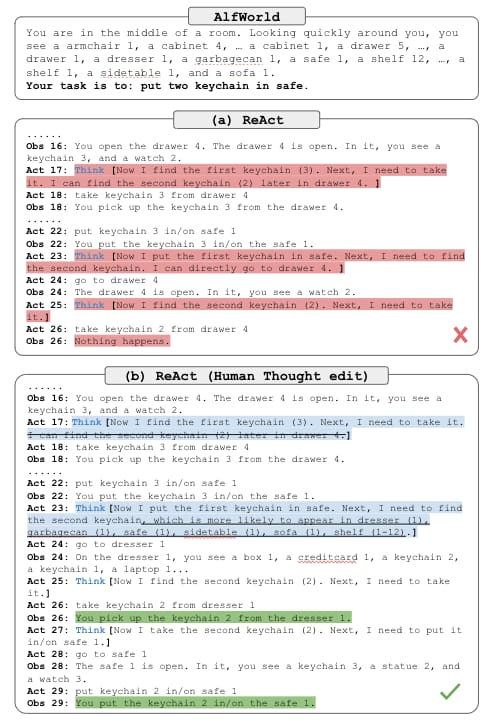

On ALFWorld and WebShop, ReAct with one- or two-shot prompting outperformed imitation and reinforcement learning methods trained with ~10⁵ task instances, with absolute improvements of 34% and 10% in success rates, respectively, over existing baselines.

| Framework | ALFWorld (2-shot) | WebShop (1-shot) |
|---|---|---|
| Act-only | 45 | 30.1 |
| ReAct | 71 | 40 |
| Imitation Learning Baselines | 37 (using ~100k samples) | 29.1 (using ~90k samples) |

We can also observe that ReAct outperforms CoT on FEVER (60.9 vs. 56.3) and lags behind CoT on HotpotQA (27.4 vs. 29.4). A detailed error analysis is provided in the paper. In summary:

- CoT suffers from fact hallucination

- ReAct’s structural constraint reduces its flexibility in formulating reasoning steps

- ReAct depends a lot on the information it’s retrieving; non-informative search results derail the model reasoning and lead to difficulty in recovering and reformulating thoughts

Prompting methods that combine and support switching between ReAct and CoT+Self-Consistency generally outperform all the other prompting methods.
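The switching idea can be sketched as two back-off rules, following our reading of the paper. ReAct → CoT-SC falls back to self-consistent chain-of-thought when ReAct returns no answer within its step budget. CoT-SC → ReAct falls back to ReAct when the majority CoT-SC answer receives fewer than half of the n votes. The `react` and `sample_cot` callables below are hypothetical stand-ins for real prompted model calls, and `n` is a free parameter here.

```python
from collections import Counter
from typing import Callable, Optional, Tuple

ReactFn = Callable[[str], Optional[str]]  # returns None when the step budget runs out
CotFn = Callable[[str], str]              # samples one chain-of-thought answer


def cot_sc(sample_cot: CotFn, question: str, n: int = 5) -> Tuple[str, int]:
    """Self-consistency: sample n CoT answers and return the majority answer and its vote count."""
    votes = Counter(sample_cot(question) for _ in range(n))
    answer, count = votes.most_common(1)[0]
    return answer, count


def react_then_cot_sc(react: ReactFn, sample_cot: CotFn, question: str, n: int = 5) -> str:
    """ReAct -> CoT-SC: trust ReAct unless it fails to finish."""
    answer = react(question)
    if answer is not None:
        return answer
    return cot_sc(sample_cot, question, n)[0]


def cot_sc_then_react(react: ReactFn, sample_cot: CotFn, question: str, n: int = 5) -> str:
    """CoT-SC -> ReAct: trust the majority vote unless it is weak (fewer than n/2 votes)."""
    answer, count = cot_sc(sample_cot, question, n)
    if count < n / 2:
        fallback = react(question)
        if fallback is not None:
            return fallback
    return answer


print(react_then_cot_sc(lambda q: None, lambda q: "Bill Clinton", "Who?"))  # Bill Clinton
```

A weak majority is treated as a sign that the model's internal knowledge is unreliable for this question, which is exactly when retrieving external information through ReAct should help most.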

Reasoning Upfront: A Game-Changer

The breakthrough came when researchers realized that having LLMs perform reasoning up front drastically improved their performance.

Rather than immediately providing an answer, the model would engage in reasoning, considering various factors and potential answers before arriving at a final response. This approach, known as “reasoning upfront,” significantly enhances the accuracy and depth of LLM-generated content.

Comparison between Chain of Thought and ReAct

Let’s break down the differences between chain-of-thought (CoT) prompting and ReAct. The two approaches have their own features and uses. Chain of thought is a linear thought process: the model reasons step by step using only its internal knowledge. ReAct, on the other hand, is all about adaptability: it interleaves reasoning with actions that fetch external information and adjusts its plan based on what it observes.

Its reasoning traces can also be inspected and edited by a human, making it a great partner for problem-solving. So, if the model already knows enough to answer and you want a straightforward approach, chain of thought is your go-to. But if you need external information, flexibility, and teamwork, ReAct has got your back!

The Role of Human-in-the-Loop Interaction

ReAct also supports human-in-the-loop interaction, allowing human inspectors to edit the reasoning traces generated by the model. This collaborative approach helps keep the LLM’s behavior aligned with human expectations and requirements, making it a valuable tool for tasks requiring human-machine cooperation.
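Here is a minimal sketch of how such an edit could work, assuming a hypothetical `Step` record and a text prompt in the Thought/Action/Observation format. The human replaces one thought, everything generated after it is discarded because it was conditioned on the old thought, and the prompt ends with an open `Action` line so the model continues from the edited reasoning.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    thought: str
    action: str
    observation: str


def resume_prompt(question: str, steps: List[Step], edit_index: int, new_thought: str) -> str:
    """Rebuild the prompt up to a human-edited thought (edit_index is 0-based)."""
    lines = [f"Question: {question}"]
    for i, step in enumerate(steps[:edit_index], start=1):
        lines += [f"Thought {i}: {step.thought}",
                  f"Action {i}: {step.action}",
                  f"Observation {i}: {step.observation}"]
    n = edit_index + 1
    # The original action at this step is dropped too; the model must choose a new one.
    lines += [f"Thought {n}: {new_thought}", f"Action {n}:"]
    return "\n".join(lines)


steps = [
    Step("I need to search Colorado orogeny.", "Search[Colorado orogeny]",
         "The Colorado orogeny was an episode of mountain building."),
    Step("It does not mention the eastern sector.", "Lookup[eastern sector]",
         "(Result 1 / 1) The eastern sector extends into the High Plains."),
    Step("So I need to search High Plains.", "Search[High Plains]",
         "High Plains refers to one of two distinct land regions"),
]
prompt = resume_prompt("What is the elevation range ...?", steps, 2,
                       "I should search High Plains (United States) directly.")
print(prompt)
```

The edited prompt is then sent back to the model, which generates a fresh action and carries on from the corrected reasoning.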

Practical Examples of ReAct

Let’s delve into practical examples to illustrate ReAct’s effectiveness further. Imagine a question-answering scenario in which a traditional LLM provides a direct answer. With chain-of-thought reasoning, you get some preliminary reasoning before the answer. ReAct goes beyond that: it generates a reasoning trace outlining the steps it intends to take, and then actively executes actions to gather information. These multi-step processes often lead to more accurate and better-grounded responses.

The Expanding Applications of ReAct

ReAct is not confined to a single field or industry. Its versatility makes it applicable across various domains. Let’s explore some practical applications of ReAct that could reshape industries:

1. Healthcare

In the healthcare sector, ReAct has the potential to transform patient care and medical research. Imagine a scenario where a medical professional can ask a language model complex questions about patient data, symptoms, and treatment options. With ReAct, the model can reason, perform actions like accessing patient records, and provide tailored recommendations. This capability could significantly enhance diagnostic accuracy, treatment planning, and medical research efforts.

2. Finance and Investment

Financial institutions are increasingly turning to AI for data analysis and investment strategies. ReAct can play a crucial role in financial decision-making. Investment analysts can prompt the model to reason about market trends, perform actions like retrieving historical data, and generate insights into potential investment opportunities. This dynamic approach to financial analysis can lead to more informed investment decisions.

3. Education

ReAct can transform the way students access information and learn. In educational settings, students can engage with AI tutors powered by ReAct, asking questions that require reasoning and action. For example, a student studying history can ask the AI tutor to explain the causes and consequences of historical events. The model can reason, retrieve relevant historical data, and provide in-depth explanations, enhancing the learning experience.

4. Content Generation

Content creators and writers can leverage ReAct to produce high-quality, informative articles, reports, and essays. By prompting the model with specific topics and guiding its reasoning, writers can efficiently generate content that meets their audience’s needs. Because the model gathers information from external sources, this approach not only saves time but also helps keep the content well-researched and comprehensive.

5. Customer Support

ReAct-powered chatbots and virtual assistants can transform customer support services. Customers can ask complex questions, and the AI-driven system can reason, perform actions like accessing databases, and provide accurate answers. This can improve customer satisfaction and make support operations more efficient.

Ethical Considerations and Challenges

While ReAct holds immense promise, it also raises ethical considerations and challenges. Ensuring transparency and accountability in AI decision-making is crucial. Human-in-the-loop interaction, as mentioned earlier, can help address these concerns by allowing human inspectors to review and edit reasoning traces generated by the model.

Data Privacy

The access to external information and actions performed by AI models must adhere to strict data privacy regulations. Safeguarding sensitive information and ensuring secure interactions with external systems are paramount.

Bias and Fairness

AI models, including those using ReAct, can inherit biases present in the data they are trained on. It is essential to continuously monitor and mitigate bias to ensure fair decision-making.

Scalability and Resource Requirements

Implementing ReAct in real-world applications can be resource-intensive, particularly when dealing with large language models. Scalability and resource management are critical considerations for making ReAct accessible and cost-effective.

Implementing ReAct in LangChain

If you’re eager to experiment with ReAct in a practical setting, LangChain provides an environment for doing just that. Implementing ReAct involves setting up an agent with access to various tools, such as Wikipedia. By providing the model with specific prompts, actions, and observations, you can guide it through complex multi-step reasoning tasks.

Step 1: Setup LLM and Langchain environment

In this example, I’m using Windows 11 with Python 3.11 on my local machine. To follow along, you need the following packages.

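```shell
# Requirements: the code in this post uses the pre-1.0 OpenAI SDK (openai.ChatCompletion)
# and the legacy LangChain agent API (initialize_agent, langchain.chat_models)
pip install "openai<1.0"
pip install "langchain<0.1"
pip install wikipedia
# Needed by the DuckDuckGo search tool used later
pip install duckduckgo-search
```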

For the demo, I’m going to use OpenAI’s gpt-3.5-turbo. If you are using Azure OpenAI services in LangChain, you need to set up the following environment variables. For more information and demos, please refer to this GitHub repo.

```shell
export OPENAI_API_TYPE=azure
export OPENAI_API_VERSION=2023-05-15
export OPENAI_API_BASE=https://your-resource-name.openai.azure.com
export OPENAI_API_KEY="..."
```

Step 2: Run your first ReAct agent

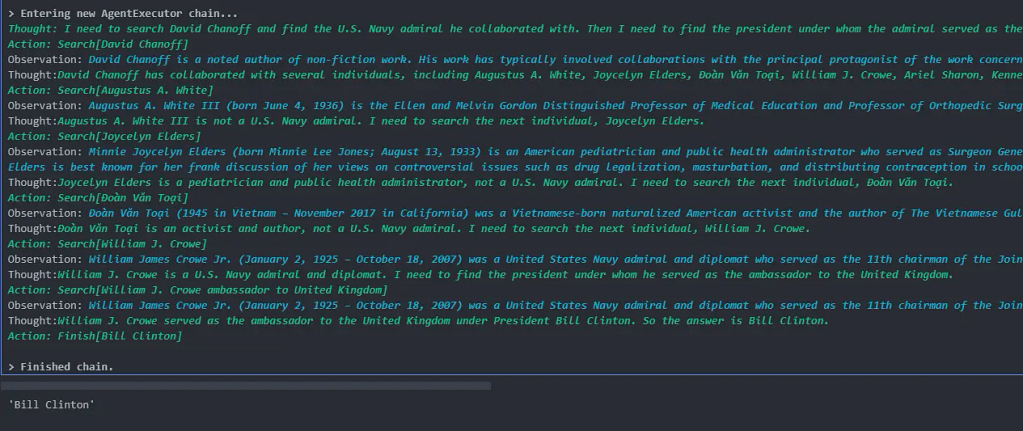

Below, a ReAct agent is set up for question answering over a document store. The agent uses the LangChain library and integrates with a DocstoreExplorer backed by Wikipedia, allowing it to search and look up terms. Two tools, “Search” and “Lookup,” are defined: Search retrieves a page from the docstore, and Lookup finds a term within the most recently retrieved page.

The agent is initialized with these tools and a ChatOpenAI language model (LLM), authenticated with your OpenAI API key. The LLM is configured with a temperature of 0, which makes its responses as deterministic as possible.

For this example, we use the LangChain agent type ‘react-docstore’ (AgentType.REACT_DOCSTORE), which is designed for interacting with a docstore.
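To see what the Search and Lookup tools do, here is a simplified, dict-backed imitation written for this post. `ToyDocstoreExplorer` is hypothetical and is not LangChain’s DocstoreExplorer. Search opens a page and returns its first paragraph (or suggests similar titles), and Lookup behaves like Ctrl+F on the current page, returning the next matching paragraph with a `(Result i / n)` prefix, as in the observations of the exemplar prompt.

```python
from typing import Dict, List, Optional


class ToyDocstoreExplorer:
    """Hypothetical in-memory stand-in for a Wikipedia-backed Search/Lookup docstore."""

    def __init__(self, pages: Dict[str, str]):
        self.pages = pages
        self.paragraphs: List[str] = []
        self.lookup_term: Optional[str] = None
        self.lookup_index = 0

    def search(self, term: str) -> str:
        """Open a page and return its first paragraph, or list similar titles."""
        page = self.pages.get(term)
        if page is None:
            self.paragraphs = []
            similar = [title for title in self.pages if term.lower() in title.lower()]
            return f"Could not find [{term}]. Similar: {similar}"
        self.paragraphs = [p.strip() for p in page.split("\n\n") if p.strip()]
        self.lookup_term, self.lookup_index = None, 0
        return self.paragraphs[0] if self.paragraphs else ""

    def lookup(self, term: str) -> str:
        """Return the next paragraph of the current page that mentions term."""
        if not self.paragraphs:
            return "Cannot look up without a successful search first."
        if term.lower() != self.lookup_term:
            self.lookup_term, self.lookup_index = term.lower(), 0
        else:
            self.lookup_index += 1  # repeating the same lookup moves to the next match
        hits = [p for p in self.paragraphs if self.lookup_term in p.lower()]
        if not hits:
            return "No results."
        if self.lookup_index >= len(hits):
            return "No more results."
        return f"(Result {self.lookup_index + 1} / {len(hits)}) {hits[self.lookup_index]}"


explorer = ToyDocstoreExplorer({
    "Colorado orogeny": (
        "The Colorado orogeny was an episode of mountain building (an orogeny) "
        "in Colorado and surrounding areas.\n\n"
        "The eastern sector extends into the High Plains and is called the Central Plains orogeny."
    ),
})
print(explorer.search("Colorado orogeny"))
print(explorer.lookup("eastern sector"))
```

Keeping the current page as state is what lets a short Lookup action follow up on a long Search result without re-fetching it.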

Different LangChain Agent Types

LangChain provides various agent architectures that combine language models with tools for specific functionalities and interactions. Each serves a distinct purpose and is tailored to specific scenarios:

- ZERO_SHOT_REACT_DESCRIPTION: This general-purpose agent utilizes the ReAct framework, selecting tools based solely on their descriptions. It can accommodate any number of tools, making it versatile and widely used.

- REACT_DOCSTORE: This agent is designed to interact with a document store. It leverages the ReAct framework and requires two essential tools: a Search tool and a Lookup tool. It is specialized for querying and retrieving information from structured docstores like Wikipedia.

- SELF_ASK_WITH_SEARCH: This agent employs a single tool named “Intermediate Answer” to look up factual answers to questions. It’s akin to the original self-ask-with-search paper, providing a simple yet effective means of retrieving specific information.

- CONVERSATIONAL_REACT_DESCRIPTION: Tailored for conversational settings, this agent uses the ReAct framework to decide which tool to use. It incorporates memory to retain previous conversation interactions, enabling context-aware responses and smoother conversational experiences.

- CHAT_ZERO_SHOT_REACT_DESCRIPTION: This variant of the ZERO_SHOT_REACT_DESCRIPTION agent is specifically adapted for chat-based interactions, ensuring seamless communication and dynamic tool selection based on conversation context.

- CHAT_CONVERSATIONAL_REACT_DESCRIPTION: Similar to CONVERSATIONAL_REACT_DESCRIPTION, this agent is optimized for chat scenarios. It utilizes the ReAct framework and memory to enhance conversational flow and context retention, ensuring more engaging and relevant interactions.

- STRUCTURED_CHAT_ZERO_SHOT_REACT_DESCRIPTION: This agent is equipped with advanced capabilities, allowing the use of multi-input tools to augment schemas and create structured action inputs. It’s particularly useful for intricate tasks, such as precise navigation within a browser, demanding complex tool usage.

- OPENAI_FUNCTIONS and OPENAI_MULTI_FUNCTIONS: These agents are specifically designed to work with certain OpenAI models like gpt-3.5-turbo-0613 and gpt-4-0613. These models are fine-tuned to detect when a function should be called and respond with the inputs required for the function. The OpenAI Functions and Multi-Functions Agents are optimized to interact seamlessly with these models, enabling efficient execution of specific tasks defined by the models’ functions.
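Agents such as ZERO_SHOT_REACT_DESCRIPTION pick tools from their descriptions alone. Here is a minimal sketch of that idea, with a hypothetical `SimpleTool` type and prompt wording of our own (not LangChain’s actual prompt). Descriptions are rendered into the prompt, and whatever tool name the model writes is dispatched, with unknown names turned into an observation the model can react to.

```python
from typing import Callable, List, NamedTuple


class SimpleTool(NamedTuple):
    name: str
    description: str
    func: Callable[[str], str]


def render_tool_section(tools: List[SimpleTool]) -> str:
    """List each tool as 'name: description'; this text is all the model knows about a tool."""
    lines = [f"{t.name}: {t.description}" for t in tools]
    names = ", ".join(t.name for t in tools)
    return ("You have access to the following tools:\n" + "\n".join(lines)
            + f"\nThe action should be one of [{names}].")


def dispatch(tools: List[SimpleTool], action: str, action_input: str) -> str:
    """Run the tool the model named and return its output as the next observation."""
    by_name = {t.name: t for t in tools}
    tool = by_name.get(action)
    if tool is None:
        return f"{action} is not a valid tool, try one of [{', '.join(by_name)}]."
    return tool.func(action_input)


tools = [
    SimpleTool("Calculator", "Useful for adding integers. Input looks like 2+3.",
               lambda expr: str(sum(int(x) for x in expr.split("+")))),
    SimpleTool("Echo", "Repeats the input back.", lambda text: text),
]
print(render_tool_section(tools))
print(dispatch(tools, "Calculator", "2+3"))  # 5
```

Since the description is the only signal the model gets, a vague description leads to wrong tool choices, which is why the tools later in this post spell out when they are useful.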

Once initialized, the ReAct agent performs question-answering by processing the query “Author David Chanoff has collaborated with a U.S. Navy admiral who served as the ambassador to the United Kingdom under which President?” using the configured tools and the language model, generating a response based on the information available in the docstore.

```python
from langchain.docstore.wikipedia import Wikipedia
from langchain.agents import initialize_agent, Tool, AgentType
from langchain.agents.react.base import DocstoreExplorer
from langchain.chat_models import ChatOpenAI

# Uncomment if you are using Azure OpenAI
# from langchain.chat_models import AzureChatOpenAI

# Build the tools: a Wikipedia-backed docstore that supports Search and Lookup
docstore = DocstoreExplorer(Wikipedia())

tools = [
    Tool(
        name="Search",
        func=docstore.search,
        description="Search for a term in the docstore.",
    ),
    Tool(
        name="Lookup",
        func=docstore.lookup,
        description="Lookup a term in the docstore.",
    ),
]

OPENAI_KEY = "Your Open AI key"

# Build the LLM; temperature=0 keeps the output as deterministic as possible
llm = ChatOpenAI(openai_api_key=OPENAI_KEY,
                 temperature=0,
                 model_name="gpt-3.5-turbo")

# Uncomment if you are using Azure OpenAI (the deployment name is a placeholder)
# llm = AzureChatOpenAI(
#     deployment_name="your-gpt-35-turbo-deployment",
#     temperature=0,
# )

# Initialize the ReAct docstore agent
react = initialize_agent(tools=tools,
                         llm=llm,
                         agent=AgentType.REACT_DOCSTORE,
                         verbose=True)

# Perform question answering
question = "Author David Chanoff has collaborated with a U.S. Navy admiral who served as the ambassador to the United Kingdom under which President?"
react.run(question)
```

With verbose=True, the agent prints its thought process as it looks for the answer to our question.

Finally, the output parser recognizes that the final answer is “Bill Clinton”, and the chain is completed.

You can print the prompt template the agent uses as follows:

```python
print(react.agent.llm_chain.prompt.template)

# Output
```

```
Question: What is the elevation range for the area that the eastern sector of the Colorado orogeny extends into?
Thought: I need to search Colorado orogeny, find the area that the eastern sector of the Colorado orogeny extends into, then find the elevation range of the area.
Action: Search[Colorado orogeny]
Observation: The Colorado orogeny was an episode of mountain building (an orogeny) in Colorado and surrounding areas.
Thought: It does not mention the eastern sector. So I need to look up eastern sector.
Action: Lookup[eastern sector]
Observation: (Result 1 / 1) The eastern sector extends into the High Plains and is called the Central Plains orogeny.
Thought: The eastern sector of Colorado orogeny extends into the High Plains. So I need to search High Plains and find its elevation range.
Action: Search[High Plains]
Observation: High Plains refers to one of two distinct land regions
Thought: I need to instead search High Plains (United States).
Action: Search[High Plains (United States)]
Observation: The High Plains are a subregion of the Great Plains. From east to west, the High Plains rise in elevation from around 1,800 to 7,000 ft (550 to 2,130 m).[3]
Thought: High Plains rise in elevation from around 1,800 to 7,000 ft, so the answer is 1,800 to 7,000 ft.
Action: Finish[1,800 to 7,000 ft]

Question: Musician and satirist Allie Goertz wrote a song about the "The Simpsons" character Milhouse, who Matt Groening named after who?
Thought: The question simplifies to "The Simpsons" character Milhouse is named after who. I only need to search Milhouse and find who it is named after.
Action: Search[Milhouse]
Observation: Milhouse Mussolini Van Houten is a recurring character in the Fox animated television series The Simpsons voiced by Pamela Hayden and created by Matt Groening.
Thought: The paragraph does not tell who Milhouse is named after, maybe I can look up "named after".
Action: Lookup[named after]
Observation: (Result 1 / 1) Milhouse was named after U.S. president Richard Nixon, whose middle name was Milhous.
Thought: Milhouse was named after U.S. president Richard Nixon, so the answer is Richard Nixon.
Action: Finish[Richard Nixon]

Question: Which documentary is about Finnish rock groups, Adam Clayton Powell or The Saimaa Gesture?
Thought: I need to search Adam Clayton Powell and The Saimaa Gesture, and find which documentary is about Finnish rock groups.
Action: Search[Adam Clayton Powell]
Observation: Could not find [Adam Clayton Powell]. Similar: ['Adam Clayton Powell III', 'Seventh Avenue (Manhattan)', 'Adam Clayton Powell Jr. State Office Building', 'Isabel Washington Powell', 'Adam Powell', 'Adam Clayton Powell (film)', 'Giancarlo Esposito'].
Thought: To find the documentary, I can search Adam Clayton Powell (film).
Action: Search[Adam Clayton Powell (film)]
Observation: Adam Clayton Powell is a 1989 American documentary film directed by Richard Kilberg. The film is about the rise and fall of influential African-American politician Adam Clayton Powell Jr.[3][4] It was later aired as part of the PBS series The American Experience.
Thought: Adam Clayton Powell (film) is a documentary about an African-American politician, not Finnish rock groups. So the documentary about Finnish rock groups must instead be The Saimaa Gesture.
Action: Finish[The Saimaa Gesture]

Question: What profession does Nicholas Ray and Elia Kazan have in common?
Thought: I need to search Nicholas Ray and Elia Kazan, find their professions, then find the profession they have in common.
Action: Search[Nicholas Ray]
Observation: Nicholas Ray (born Raymond Nicholas Kienzle Jr., August 7, 1911 - June 16, 1979) was an American film director, screenwriter, and actor best known for the 1955 film Rebel Without a Cause.
Thought: Professions of Nicholas Ray are director, screenwriter, and actor. I need to search Elia Kazan next and find his professions.
Action: Search[Elia Kazan]
Observation: Elia Kazan was an American film and theatre director, producer, screenwriter and actor.
Thought: Professions of Elia Kazan are director, producer, screenwriter, and actor. So profession Nicholas Ray and Elia Kazan have in common is director, screenwriter, and actor.
Action: Finish[director, screenwriter, actor]

Question: Which magazine was started first Arthur's Magazine or First for Women?
Thought: I need to search Arthur's Magazine and First for Women, and find which was started first.
Action: Search[Arthur's Magazine]
Observation: Arthur's Magazine (1844-1846) was an American literary periodical published in Philadelphia in the 19th century.
Thought: Arthur's Magazine was started in 1844. I need to search First for Women next.
Action: Search[First for Women]
Observation: First for Women is a woman's magazine published by Bauer Media Group in the USA.[1] The magazine was started in 1989.
Thought: First for Women was started in 1989. 1844 (Arthur's Magazine) < 1989 (First for Women), so Arthur's Magazine was started first.
Action: Finish[Arthur's Magazine]

Question: Were Pavel Urysohn and Leonid Levin known for the same type of work?
Thought: I need to search Pavel Urysohn and Leonid Levin, find their types of work, then find if they are the same.
Action: Search[Pavel Urysohn]
Observation: Pavel Samuilovich Urysohn (February 3, 1898 - August 17, 1924) was a Soviet mathematician who is best known for his contributions in dimension theory.
Thought: Pavel Urysohn is a mathematician. I need to search Leonid Levin next and find its type of work.
Action: Search[Leonid Levin]
Observation: Leonid Anatolievich Levin is a Soviet-American mathematician and computer scientist.
Thought: Leonid Levin is a mathematician and computer scientist. So Pavel Urysohn and Leonid Levin have the same type of work.
Action: Finish[yes]

Question: {input}
{agent_scratchpad}
```

This example shows how ideas are translated into actions through the interplay between the LLM, external tools, and the LangChain framework.
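The `{input}` and `{agent_scratchpad}` placeholders at the end of the printed template are what make it reusable: the new question goes into `{input}`, and the steps taken so far go into `{agent_scratchpad}`. Here is a minimal sketch of that filling step with Python’s `str.format`, using a shortened one-example template and a hypothetical `build_scratchpad` helper; it illustrates the idea and is not LangChain’s internal code.

```python
from typing import List, Tuple

# A shortened, one-example version of the few-shot template printed earlier.
TEMPLATE = (
    "Question: Which magazine was started first Arthur's Magazine or First for Women?\n"
    "Thought: I need to search Arthur's Magazine and First for Women, and find which was started first.\n"
    "Action: Search[Arthur's Magazine]\n"
    "Observation: Arthur's Magazine (1844-1846) was an American literary periodical.\n"
    "Thought: Arthur's Magazine was started in 1844. I need to search First for Women next.\n"
    "Action: Search[First for Women]\n"
    "Observation: First for Women is a woman's magazine. The magazine was started in 1989.\n"
    "Thought: 1844 < 1989, so Arthur's Magazine was started first.\n"
    "Action: Finish[Arthur's Magazine]\n"
    "\n"
    "Question: {input}\n"
    "{agent_scratchpad}"
)


def build_scratchpad(steps: List[Tuple[str, str, str]]) -> str:
    """Serialize earlier (thought, action, observation) steps in the exemplar format."""
    text = "".join(f"Thought: {t}\nAction: {a}\nObservation: {o}\n" for t, a, o in steps)
    # End on an open "Thought:" so the model's next completion is its next reasoning step.
    return text + "Thought:"


question = "Who was Milhouse from The Simpsons named after?"
steps = [("I need to search Milhouse.", "Search[Milhouse]",
          "Milhouse Mussolini Van Houten is a recurring character in The Simpsons.")]
prompt = TEMPLATE.format(input=question, agent_scratchpad=build_scratchpad(steps))
print(prompt)
```

On every iteration the scratchpad grows by one step, so the model always sees the examples, the question, and its own progress in a single prompt.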

Build Your Own Custom Tools and Agents

In this section, we build the following custom agents and tools in LangChain:

- Self-Ask Agent with Search Tool (self-ask-with-search):
  - Answers open questions using a search tool.
  - Uses LangChain’s wrapper class for DuckDuckGo search.
- Random Number Generator Agent: generates random numbers in response to user requests, serving as a simple random number generator.
- Philosophical Inquiry Agent: handles deep philosophical inquiries, specifically focusing on the meaning of life. It explores various philosophical and religious perspectives, emphasizing the subjective nature of this profound question.

Let’s start by setting up an instance of the ChatOpenAI class with specific parameters, such as the temperature and the model name (‘gpt-3.5-turbo’).

The temperature parameter controls the randomness of the model’s output. A higher temperature (e.g., 0.8) makes the output more diverse and creative but potentially less focused and coherent. A lower temperature (e.g., 0.2) produces more deterministic and focused responses, which may be repetitive.

```python
from langchain.chat_models import ChatOpenAI
from langchain.chains.conversation.memory import ConversationBufferMemory

OPENAI_KEY = "Enter your open ai key"

# Define the LLM
llm = ChatOpenAI(
    temperature=0,
    model_name='gpt-3.5-turbo',
    openai_api_key=OPENAI_KEY,
)
```
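Under the hood, temperature rescales the model’s scores (logits) before they are turned into token probabilities. Below is a minimal sketch of the textbook softmax-with-temperature formula; treating temperature 0 as greedy decoding is an assumption about how APIs typically handle it, not a documented guarantee for any specific provider.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities; lower temperature sharpens, higher flattens."""
    if temperature <= 0:
        # Treated as greedy decoding: all probability on the top-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 0.8):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At 0.2 almost all probability mass lands on the top token, while at 0.8 the alternatives keep a real chance of being sampled, which is the diversity-versus-focus trade-off described above.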

Creating a custom agent with LangChain tools

Now, we set up a tool that uses DuckDuckGo to search the internet for information related to specific queries, particularly those concerning current events. We use the prebuilt LangChain tool DuckDuckGoSearchResults and provide a description so that the LLM knows when to call it.

```python
# Creating a custom agent with LangChain tools
# (DuckDuckGoSearchResults requires the duckduckgo-search package)
from langchain.tools import DuckDuckGoSearchResults
from langchain.agents import Tool
from langchain.tools import BaseTool

duck_search = DuckDuckGoSearchResults()

search = Tool(
    name="search",
    func=duck_search.run,
    description="Useful for when you need to answer questions about current events. You should ask targeted questions",
)
```

Creating a custom agent with custom tools

We create two custom tools. The first, named “Meaning of Life,” sends a user-provided question to the gpt-3.5-turbo model and returns the model’s answer. The second, “Random number,” returns a random integer between 1 and 10 (inclusive) and expects the input ‘random’.

These tools are wrapped in the ‘life_tool’ and ‘random_tool’ objects, respectively, and combined into a list together with the search tool. We then initialize an agent with LangChain’s ‘initialize_agent’ function, passing the tools, the language model (‘llm’), and other parameters such as the maximum number of iterations and the memory configuration.

The result is an agent designed for conversational interaction that can draw on all three tools: search, random_tool, and life_tool.

We also set up memory for the conversation, stored under the key ‘chat_history’, so the agent can retain and use past interactions as the conversation goes on.

The ‘k’ parameter sets how much of that history the agent remembers. With k = 5, the agent keeps the five most recent exchanges, where each exchange is one user message plus the agent’s reply. Note that ‘k’ is a parameter of LangChain’s ‘ConversationBufferWindowMemory’; the plain ‘ConversationBufferMemory’ has no window and keeps the entire conversation, so the window version is the one to use when you want this limit.

This memory is what keeps the conversation coherent: when the user asks a follow-up, the agent can recall and reference recent messages. Keeping only a bounded window also caps how much history is sent to the model on every turn, while still giving the agent enough context for relevant responses.
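To see what a window of k exchanges means in practice, here is a minimal sketch of the idea in plain Python. The `WindowMemory` class is hypothetical and much simpler than LangChain’s memory classes; it only illustrates that the buffer keeps the last k exchanges (2 × k messages) and silently drops older ones.

```python
from collections import deque


class WindowMemory:
    """Hypothetical sliding-window chat memory that keeps the last k exchanges."""

    def __init__(self, k=5):
        # Each exchange is one human message plus one AI reply, so store 2 * k messages.
        self.messages = deque(maxlen=2 * k)

    def save_exchange(self, user_text, ai_text):
        self.messages.append(("human", user_text))
        self.messages.append(("ai", ai_text))

    def history(self):
        return list(self.messages)


memory_demo = WindowMemory(k=2)
for i in range(1, 4):
    memory_demo.save_exchange(f"question {i}", f"answer {i}")

# Only the two most recent exchanges survive; exchange 1 has been dropped.
print(memory_demo.history())
```

A `deque` with `maxlen` discards items from the opposite end automatically, which is exactly the "forget the oldest turn" behavior a window memory needs.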

```python
import openai

openai.api_key = OPENAI_KEY

# What the tool is supposed to do: send the question to gpt-3.5-turbo and return the reply.
# Note: openai.ChatCompletion is the pre-1.0 openai SDK interface.
def meaning_of_life(question=""):
    messages = [{"role": "user", "content": question}]
    response = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=messages,
        temperature=0,
    )
    return response.choices[0].message["content"]


# Creating a custom tool which will answer questions about the meaning of life
life_tool = Tool(
    name="Meaning of Life",
    func=meaning_of_life,
    description="Useful for when you need to answer questions about the meaning of life. You should ask targeted questions. input should be the question.",
)
```

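```python
import random

# This tool generates a random integer between 1 and 10 (inclusive).
# The input is ignored; the agent is told to pass 'random'.
def random_num(_input=""):
    # LangChain tools are expected to return text, so convert the number to a string
    return str(random.randint(1, 10))


random_tool = Tool(
    name="Random number",
    func=random_num,
    description="Useful for when you need to get a random number. input should be 'random'",
)
```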

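```python
# Creating an agent
from langchain.agents import initialize_agent
from langchain.chains.conversation.memory import ConversationBufferWindowMemory

tools = [search, random_tool, life_tool]

# Conversational agent memory: keep the last k=5 exchanges under 'chat_history'.
# (k is a ConversationBufferWindowMemory parameter; ConversationBufferMemory has no window.)
memory = ConversationBufferWindowMemory(
    memory_key="chat_history",
    k=5,
    return_messages=True,
)

# Create our agent
conversational_agent = initialize_agent(
    agent="chat-conversational-react-description",
    tools=tools,
    llm=llm,
    verbose=True,
    max_iterations=3,  # stop after at most 3 iterations of the agent loop
    early_stopping_method="generate",  # at the limit, ask the LLM for a final answer
    memory=memory,
)
```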
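Under the hood, this agent runs a ReAct loop: the LLM writes a JSON blob naming an action and its input (you can see these blobs in the logs of the examples that follow), the executor runs the named tool, the observation is fed back to the model, and the cycle repeats until the model answers with "Final Answer" or `max_iterations` is reached. The sketch below reproduces that control flow offline, with a scripted stand-in for the LLM. `scripted_llm`, `run_react_loop`, and the fallback message are hypothetical; LangChain’s AgentExecutor does considerably more, such as output parsing, error handling, callbacks, and, with `early_stopping_method="generate"`, one extra LLM call to produce a final answer instead of returning a fixed message.

```python
import json
import random


def random_number_tool(_input):
    """Mirrors the article's random_num tool: ignore the input, return 1-10 as text."""
    return str(random.randint(1, 10))


TOOLS = {"Random number": random_number_tool}


def scripted_llm(question, scratchpad):
    """Hypothetical stand-in for the chat model; returns the JSON blob a real LLM would write."""
    if not scratchpad:
        # Reason: a random number is needed, so act by calling the tool.
        return json.dumps({"action": "Random number", "action_input": "random"})
    # An observation is available, so finish with it.
    _, last_observation = scratchpad[-1]
    return json.dumps({"action": "Final Answer", "action_input": last_observation})


def run_react_loop(question, llm, tools, max_iterations=3):
    """Minimal ReAct control loop: the model proposes an action, we run it, feed back the result."""
    scratchpad = []  # (action, observation) pairs shown to the model on the next step
    for _ in range(max_iterations):
        step = json.loads(llm(question, scratchpad))
        if step["action"] == "Final Answer":
            return step["action_input"]
        observation = tools[step["action"]](step["action_input"])  # Act
        scratchpad.append((step, observation))  # Observe
    # Hypothetical fallback once the step budget is spent (compare early_stopping_method="force").
    return "Agent stopped due to iteration limit."


answer = run_react_loop("Can you give me a random number?", scripted_llm, TOOLS)
print(answer)
```

The `max_iterations` cap is what keeps a confused model from calling tools forever; the second test below shows the loop giving up when the model never produces a final answer.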

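Creating a custom agent with the LangChain DuckDuckGo tool

In this example, the user asks for the current time in London. The agent decides to use the search tool, reads the returned snippets, and replies. Note, however, that the final answer does not actually state the clock time: the agent repeated a snippet saying that London is 51 minutes ahead of apparent solar time. A ReAct agent is only as reliable as the observations it receives and how the model interprets them, so answers like this one are worth checking.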

```
# Ask a question that needs up-to-date information from the web
conversational_agent("What time is it in London?")

# Internal logs
> Entering new AgentExecutor chain...
{
    "action": "search",
    "action_input": "current time in London"
}
Observation: [snippet: Current local time in United Kingdom - England - London. Get London's weather and area codes, time zone and DST. Explore London's sunrise and sunset, moonrise and moonset., title: Current Local Time in London, England, United Kingdom, link: https://www.timeanddate.com/worldclock/uk/london], [snippet: The graph above illustrates clock changes in London during 2023. London in GMT Time Zone. London uses Greenwich Mean Time (GMT) during standard time and British Summer Time (BST) during Daylight Saving Time (DST), or summer time.. The Difference between GMT and UTC. In practice, GMT and UTC share the same time on a clock, which can cause them to be interchanged or confused., title: Time Zone in London, England, United Kingdom - timeanddate.com, link: https://www.timeanddate.com/time/zone/uk/london], [snippet: The current local time in London is 51 minutes ahead of apparent solar time. London on the map. London is the capital of United Kingdom. Latitude: 51.51. Longitude: -0.13; Population: 8,962,000; Open London in Google Maps. Best restaurants in London #1 Buen Ayre - Argentine and steakhouses food, title: Time in London, United Kingdom now, link: https://time.is/London], [snippet: View current time in London - England, United Kingdom. Time difference from GMT/UTC: +01:00 hours. United Kingdom is currently observing Daylight Saving Time (DST). London time zone converter to other locations., title: Time now in London, - GMT - World Time & Converters, link: https://greenwichmeantime.com/time/united-kingdom/london/]
Thought:{
    "action": "Final Answer",
    "action_input": "The current time in London is 51 minutes ahead of apparent solar time."
}
> Finished chain.

# Output
{'input': 'What time is it in London?',
 'chat_history': [HumanMessage(content='What time is it in London?', additional_kwargs={}, example=False),
  AIMessage(content='The current time in London is 51 minutes ahead of apparent solar time.', additional_kwargs={}, example=False)],
 'output': 'The current time in London is 51 minutes ahead of apparent solar time.'}
```

Creating a custom agent with LangChain that generates a random number

This agent generates random numbers by using the random_tool.

```
# Ask the agent for a random number
conversational_agent("Can you give me a random number?")

# Internal logs
> Entering new AgentExecutor chain...
{
    "action": "Random number",
    "action_input": "random"
}
Observation: 4
Thought:{
    "action": "Final Answer",
    "action_input": "4"
}
> Finished chain.

# Output
{'input': 'Can you give me a random number?',
 'chat_history': [HumanMessage(content='What time is it in London?', additional_kwargs={}, example=False),
  AIMessage(content='The current time in London is 51 minutes ahead of apparent solar time.', additional_kwargs={}, example=False),
  HumanMessage(content='Can you give me a random number?', additional_kwargs={}, example=False),
  AIMessage(content='4', additional_kwargs={}, example=False)],
 'output': '4'}
```

Creating a custom agent with LangChain that answers user queries about the meaning of life

Before asking, we give the agent a “PhiloGPT” persona by replacing its system prompt. The agent then delves into philosophical perspectives, including existentialism, Eastern philosophies, and the subjective nature of life’s purpose, emphasizing personal exploration and fulfillment in existence.
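The persona swap in the code that follows works by overwriting `conversational_agent.agent.llm_chain.prompt.messages[0].prompt.template`. As far as we can tell from LangChain’s conversational chat agent, its prompt is a list of messages: a system message first, then the chat history, then the human turn (which carries the tool list and the JSON format instructions), then the agent’s scratchpad. Replacing index 0 therefore changes the persona while leaving the tool instructions intact. The sketch below models that layout with plain dicts; `build_messages` and the default system text are hypothetical, not LangChain code.

```python
def build_messages(system_template, chat_history, user_turn, scratchpad):
    """Hypothetical model of the conversational chat agent's prompt layout."""
    messages = [{"role": "system", "content": system_template}]  # index 0: persona
    messages += chat_history  # prior exchanges from memory
    messages.append({"role": "user", "content": user_turn})  # question + tool instructions
    messages += scratchpad  # earlier actions and observations
    return messages


history = [
    {"role": "user", "content": "Can you give me a random number?"},
    {"role": "assistant", "content": "4"},
]
default_prompt = build_messages("Assistant is a helpful AI.", history, "What is the meaning of life?", [])
philo_prompt = build_messages("You're now PhiloGPT...", history, "What is the meaning of life?", [])

# Only the system message differs; the history and the user turn are unchanged.
print(default_prompt[0]["content"], "->", philo_prompt[0]["content"])
```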

```
prompt_template = """You're now PhiloGPT — an expert in philosophy and metaphysical matters. Henceforth, respond with ponder-worthy, world-class answers whenever I ask you a question.

To formulate your answers, feel free to tap into every single school of thought, philosophy, and religion known to man. You can also use your own creativity and judgment. Pack your answers into 3 paragraphs of 2 to 3 sentences each for easy readability and understanding.

Please stick to the above rules and requests for every prompt I provide you henceforth."""

# Replace the agent's system prompt (the first message of its chat prompt) with the PhiloGPT persona
conversational_agent.agent.llm_chain.prompt.messages[0].prompt.template = prompt_template

# Ask the agent about the meaning of life
conversational_agent("What is the meaning of life?")

# Internal logs
> Entering new AgentExecutor chain...
{
    "action": "Meaning of Life",
    "action_input": "What is the meaning of life?"
}
Observation: The meaning of life is a profound question that has captivated the minds of philosophers, theologians, and thinkers throughout history. From an existentialist perspective, life has no inherent meaning, and it is up to each individual to create their own purpose and find fulfillment in their existence. Drawing from Eastern philosophies, life can be seen as a journey of self-discovery and enlightenment, where the ultimate goal is to transcend suffering and achieve liberation from the cycle of rebirth. Alternatively, some religious perspectives posit that the meaning of life lies in fulfilling a divine purpose or following a set of moral principles to attain salvation or union with a higher power. Ultimately, the meaning of life is a deeply personal and subjective quest, and it is up to each individual to explore and find their own unique answer.

In the grand tapestry of existence, the meaning of life can also be understood through the lens of interconnectedness and interdependence. From a Buddhist perspective, life is characterized by the inherent suffering caused by attachment and desire. By cultivating mindfulness and compassion, one can transcend the ego and realize the interconnectedness of all beings, finding meaning in alleviating suffering and promoting harmony. Similarly, from a Hindu perspective, life is a continuous cycle of birth, death, and rebirth, driven by the law of karma. The purpose of life is to learn and evolve spiritually, ultimately attaining moksha, liberation from the cycle of reincarnation. In this view, the meaning of life lies in fulfilling one's dharma, or duty, and seeking union with the divine.

Ultimately, the meaning of life is a deeply personal and subjective inquiry, as diverse as the individuals who ponder it. It is a question that invites us to explore our values, beliefs, and aspirations, and to seek a sense of purpose and fulfillment in our existence. Whether we find meaning through personal achievements, relationships, spiritual pursuits, or the pursuit of knowledge, the quest for meaning is an ongoing journey that enriches our lives and gives depth to our human experience.
Thought:{
    "action": "Final Answer",
    "action_input": "The meaning of life is a deeply personal and subjective inquiry, as diverse as the individuals who ponder it. It is a question that invites us to explore our values, beliefs, and aspirations, and to seek a sense of purpose and fulfillment in our existence. Whether we find meaning through personal achievements, relationships, spiritual pursuits, or the pursuit of knowledge, the quest for meaning is an ongoing journey that enriches our lives and gives depth to our human experience."
}
> Finished chain.

# Output
{'input': 'What is the meaning of life?',
 'chat_history': [HumanMessage(content='What is the meaning of life?', additional_kwargs={}, example=False),
  AIMessage(content="The meaning of life is a profound question that has captivated the minds of philosophers, theologians, and thinkers throughout history. From an existentialist perspective, life has no inherent meaning, and it is up to each individual to create their own purpose and find fulfillment in their existence. Drawing from Eastern philosophies, life can be seen as a journey of self-discovery and enlightenment, where the ultimate goal is to transcend suffering and achieve liberation from the cycle of rebirth. Alternatively, some religious perspectives posit that the meaning of life lies in fulfilling a divine purpose or following a set of moral principles to attain salvation or union with a higher power. Ultimately, the meaning of life is a deeply personal and subjective quest, and it is up to each individual to explore and find their own unique answer.\n\nIn the grand tapestry of existence, the meaning of life can also be understood through the lens of interconnectedness and interdependence. From a Buddhist perspective, life is characterized by the inherent suffering caused by attachment and desire. By cultivating mindfulness and compassion, one can transcend the ego and realize the interconnectedness of all beings, finding meaning in alleviating suffering and promoting harmony. Similarly, from a Hindu perspective, life is a continuous cycle of birth, death, and rebirth, driven by the law of karma. The purpose of life is to learn and evolve spiritually, ultimately attaining moksha, liberation from the cycle of reincarnation. In this view, the meaning of life lies in fulfilling one's dharma, or duty, and seeking union with the divine.\n\nUltimately, the meaning of life is a deeply personal and subjective inquiry, as diverse as the individuals who ponder it. It is a question that invites us to explore our values, beliefs, and aspirations, and to seek a sense of purpose and fulfillment in our existence. Whether we find meaning through personal achievements, relationships, spiritual pursuits, or the pursuit of knowledge, the quest for meaning is an ongoing journey that enriches our lives and gives depth to our human experience.", additional_kwargs={}, example=False),
  HumanMessage(content='What is the meaning of life?', additional_kwargs={}, example=False),
  AIMessage(content='The meaning of life is a deeply personal and subjective inquiry, as diverse as the individuals who ponder it. It is a question that invites us to explore our values, beliefs, and aspirations, and to seek a sense of purpose and fulfillment in our existence. Whether we find meaning through personal achievements, relationships, spiritual pursuits, or the pursuit of knowledge, the quest for meaning is an ongoing journey that enriches our lives and gives depth to our human experience.', additional_kwargs={}, example=False)],
 'output': 'The meaning of life is a deeply personal and subjective inquiry, as diverse as the individuals who ponder it. It is a question that invites us to explore our values, beliefs, and aspirations, and to seek a sense of purpose and fulfillment in our existence. Whether we find meaning through personal achievements, relationships, spiritual pursuits, or the pursuit of knowledge, the quest for meaning is an ongoing journey that enriches our lives and gives depth to our human experience.'}
```

Conclusion: The ReAct Revolution

In conclusion, ReAct is more than just a concept; it changes how language models can be put to work. By interleaving reasoning steps with actions such as searching the web or calling custom tools, ReAct lets a model ground its answers in external information and tackle tasks that prompting alone handles poorly.

As natural language processing continues to evolve, ReAct is an exciting frontier. Understanding and applying it can make your LLM projects more versatile, as the agent in this post shows by combining a chat model with web search, custom functions, and conversational memory. Future research is likely to build on ReAct’s foundation, leading to even more applications in AI.

As we reach the end, I want to express my heartfelt thanks for joining me on this adventure. Your time and engagement mean the world. If you have any thoughts or suggestions, please share them in the comments below; your insights fuel this community.

I’d love to connect further, so feel free to follow me on LinkedIn. Your presence and feedback are the beating heart of this space. Thank you for being a part of this enriching journey. Until next time, here’s to more shared discoveries!

References:

- ReAct paper: https://arxiv.org/abs/2210.03629
- react-lm GitHub repository
- https://www.promptingguide.ai/techniques/react
- ReAct: Synergizing Reasoning and Acting in Language Models (Google)
- A simple Python implementation of the ReAct pattern for LLMs
- Generative AI for SAP Part IV. LLMs orchestration using ReAct, Agents and ToT
- How to ReAct To Simple AI Agents